Classification: Naive Bayes

This post demonstrates the basic process of Land Use and Land Cover (LULC) classification using Naive Bayes in Python.

Introduction

In this chapter we will classify the Sentinel-2 image we’ve been working with using a supervised classification approach that incorporates the training data we worked with in chapter 4. Specifically, we will use Naive Bayes. Naive Bayes predicts the probability that a data point belongs to each class, and the class with the highest probability is taken as the most likely class. These probabilities come from Bayes’ theorem, which expresses the probability of a class given the observed features in terms of the class’s prior probability and the likelihood of those features within that class. The method is called "naive" because it assumes the features (here, the spectral bands) are independent of one another within each class, which keeps the calculation simple even though neighbouring bands are usually correlated. Naive Bayes is quite fast compared with some other machine learning approaches (e.g., SVM can be quite computationally intensive). This isn’t to say that it is the best per se; rather, it is a great first step into the world of machine learning for classification and regression.
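For reference, the decision rule described above can be written out as follows. The symbols are introduced here for illustration: $C_k$ is the $k$-th land-cover class, $\mathbf{x} = (x_1, \dots, x_n)$ is a pixel's vector of $n$ band values, and, assuming the Gaussian variant of Naive Bayes, each band's values within class $C_k$ are modelled as a normal distribution with mean $\mu_{k,i}$ and variance $\sigma_{k,i}^2$. The product in the first line is where the naive independence assumption enters.

$$
P(C_k \mid \mathbf{x}) = \frac{P(C_k)\, P(\mathbf{x} \mid C_k)}{P(\mathbf{x})},
\qquad
P(\mathbf{x} \mid C_k) = \prod_{i=1}^{n} P(x_i \mid C_k)
$$

$$
P(x_i \mid C_k) = \frac{1}{\sqrt{2\pi\sigma_{k,i}^2}} \exp\!\left(-\frac{(x_i - \mu_{k,i})^2}{2\sigma_{k,i}^2}\right),
\qquad
\hat{y} = \arg\max_{k} \; P(C_k) \prod_{i=1}^{n} P(x_i \mid C_k)
$$

Because $P(\mathbf{x})$ is the same for every class, it can be dropped when picking the most probable class $\hat{y}$.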

scikit-learn

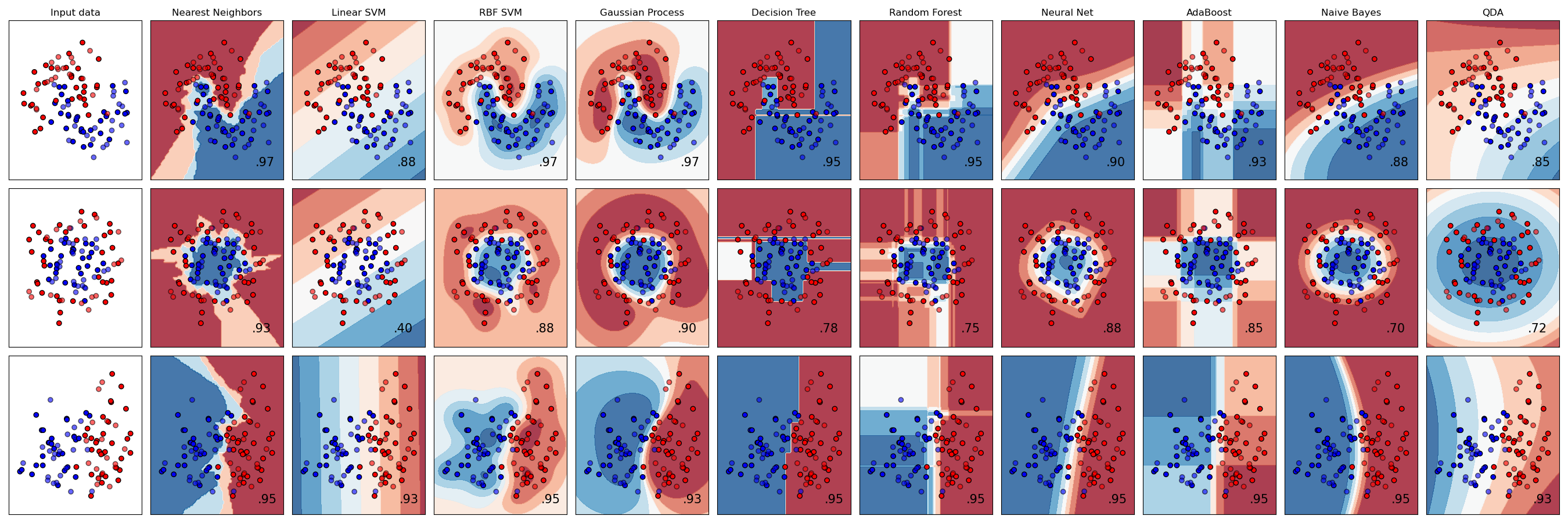

In this chapter we will be using the Naive Bayes implementation provided by the scikit-learn library. scikit-learn is an amazing machine learning library that provides easy and consistent interfaces to many of the most popular machine learning algorithms. It is built on top of the established scientific Python libraries, including numpy, scipy, and matplotlib, which makes it easy to incorporate into your workflow. In my opinion, its real strength is the number of methods it offers for accomplishing any given task. No single algorithm is best for all tasks under all circumstances, and scikit-learn helps you understand this by hiding the details of each algorithm behind simple, consistent interfaces. For example:

This figure shows the classification predictions and the decision surfaces produced for three classification problems using 9 different classifiers. What is even more impressive is that all of this took only about 110 lines of code, including comments!
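To illustrate what a consistent interface means in practice, here is a minimal sketch (not the code behind the figure) that fits a few scikit-learn classifiers through exactly the same `fit` and `score` calls. The synthetic dataset from `make_classification` and the particular classifiers chosen are assumptions for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (assumption): 300 samples, 4 features, 3 classes
X, y = make_classification(n_samples=300, n_features=4, n_informative=3,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           random_state=0)

classifiers = {
    "Naive Bayes": GaussianNB(),
    "Nearest Neighbors": KNeighborsClassifier(n_neighbors=5),
    "Decision Tree": DecisionTreeClassifier(max_depth=5, random_state=0),
    "RBF SVM": SVC(),
}

scores = {}
for name, clf in classifiers.items():
    # Every estimator exposes the same fit/predict/score methods,
    # so swapping algorithms only changes the constructor call
    clf.fit(X, y)
    scores[name] = clf.score(X, y)  # accuracy on the training data itself
    print(f"{name:>18}: {scores[name]:.3f}")
```

Training accuracy rewards memorization, so these scores are not a fair comparison between algorithms; the point is only that trying a different classifier is a one-line change.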

Training the Classifier

Now that we have our X matrix of feature inputs (the spectral bands) and our y array (the labels), we can train our model.

See the scikit-learn documentation for the usage of the `GaussianNB` (Gaussian Naive Bayes) classifier.

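A minimal sketch of the training step, assuming `X` has shape `(n_samples, n_bands)` and `y` holds integer class labels. The arrays below are hypothetical stand-ins for the real training data from chapter 4: the four bands, the three classes and their mean reflectance values are made up for illustration.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Hypothetical training data: 100 labelled pixels per class, 4 band values each
rng = np.random.default_rng(0)
n_per_class, n_bands = 100, 4
class_means = np.array([[0.05, 0.08, 0.04, 0.30],   # e.g. vegetation (made up)
                        [0.12, 0.14, 0.16, 0.20],   # e.g. built-up (made up)
                        [0.06, 0.05, 0.03, 0.02]])  # e.g. water (made up)
X = np.vstack([rng.normal(m, 0.01, size=(n_per_class, n_bands)) for m in class_means])
y = np.repeat([1, 2, 3], n_per_class)

# Fitting estimates a prior plus a per-band mean and variance for each class
gnb = GaussianNB()
gnb.fit(X, y)

# Posterior probabilities for a few pixels; columns follow gnb.classes_
proba = gnb.predict_proba(X[:5])
pred = gnb.predict(X[:5])
print(gnb.classes_)  # [1 2 3]
print(pred)
```

`theta_` holds the per-class, per-band means the model learned, and `predict` returns the class in `classes_` with the highest posterior probability from `predict_proba`.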

It is that simple to train a classifier in scikit-learn! The hard part is often validation and interpretation.
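One common way to start on validation is a stratified hold-out split: set part of the labelled data aside, fit on the rest, and summarise the held-out predictions with overall accuracy and a confusion matrix. A minimal sketch, assuming synthetic data from `make_classification` and a 70/30 split, both chosen only for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic stand-in data (assumption): 600 samples, 4 "bands", 3 classes
X, y = make_classification(n_samples=600, n_features=4, n_informative=4,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           random_state=0)

# Hold out 30% of the samples, keeping class proportions the same in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42)

gnb = GaussianNB().fit(X_train, y_train)
y_pred = gnb.predict(X_test)

acc = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)
print(f"Overall accuracy: {acc:.3f}")
print(cm)
```

Rows of the confusion matrix are the true classes and columns the predicted classes (in sorted label order), so off-diagonal cells show which classes the model confuses.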

Predicting on the image

With our Naive Bayes classifier fit, we can now classify the image.

We will only open the subset of the image viewed above, because classifying the full scene is too computationally intensive for most computers.
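Classifying an image with a scikit-learn model means rearranging the raster into the same `(n_samples, n_bands)` layout used for training, predicting one label per pixel, and reshaping the labels back into a map. A minimal sketch, assuming the subset has been read band-first as `(bands, rows, cols)`, which is the layout rasterio's `read()` returns; the random image, toy training labels and array sizes below are hypothetical stand-ins.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

rng = np.random.default_rng(0)
n_bands = 4

# Hypothetical training data standing in for the X, y built earlier
X = rng.random((200, n_bands))
y = (X[:, 3] > 0.5).astype(int) + 1  # toy labels 1/2 driven by the 4th band
clf = GaussianNB().fit(X, y)

# Hypothetical image subset, band-first: (bands, rows, cols)
img = rng.random((n_bands, 50, 60))

# Move bands to the last axis, then flatten to one row per pixel
img_hwc = np.moveaxis(img, 0, -1)     # (rows, cols, bands)
pixels = img_hwc.reshape(-1, n_bands)  # (rows * cols, bands)

# Classify every pixel, then restore the 2-D image shape
class_map = clf.predict(pixels).reshape(img_hwc.shape[:2])
print(class_map.shape)  # (50, 60)
```

For a full Sentinel-2 scene, the same reshape-and-predict step can be applied one window of the raster at a time so that only part of the image is held in memory.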
